I typically introduce my philosophy for planning and organizing the testing effort on a project, in part, as a risk-driven testing approach. In a recent conversation, a client said they followed a risk-based approach to their testing and asked whether I was referring to something different.

My initial reaction was to say no; they are pretty much the same thing. But then, in the cautionary spirit of asking what one means when they say they “do Agile”, I asked them to outline their risk-based approach and describe how it informed their testing effort.

In the following conversation, it became clear that we had different flavours of meaning behind our words. But, were the differences important or were we talking about Fancy Chickens?

Image source: Silkie by Camille Gillet and Sebright by Latropox [CC BY-SA 4.0]

I have spent years talking about risk-based testing (ack!). At some point, I began referring more often to risk-driven testing but continued to use the two terms interchangeably much of the time. However, I have always preferred “risk-driven testing”. At first, it was mostly from a phrasing point of view; “risk-based” sounds more passive than “risk-driven” because of the energy or call to act that the word “driven” implies. But at the same time, risk-driven always helped me think more strategically.

From an implementation point of view, I was thinking: risk-based testing means here are the relatively risky areas, we should spend time checking them out, in a “breadth and depth” prioritized, time-permitting manner, to make sure things are working properly. Whereas risk-driven testing investigates questions like: “is this really a risk?”, “how/why is it a risk?”, “how can the risk become real?”, etc. Risk-driven testing is not just about running a test scenario or a set of test cases to check that things are (still) working; it is about supporting risk management, up to and including running tests against the built system. And so, to me, risk-driven included all that was risk-based and more.

I don’t like to be “that guy” that introduces yet another “test type” or buzzword into conversations with clients or team members. But, I do like to make distinctions around the value different approaches to testing can provide (or not) and sometimes a label can be very helpful in reaching understanding/agreement… including with yourself.

This recent conversation got me thinking a little deeper (once again) about how others use the term risk-based testing and the differences I think about when considering risk-driven testing. Sometimes it even feels like they are two different things… Could I formally differentiate the two terms: risk-driven and risk-based? To succeed, there should be value in the result. In other words, the definitions of risk-driven testing and risk-based testing should, by being differentiated from each other, provide some distinct added-value to the test strategy/effort when one was selected and the other was not, or when both were included together.

In the spirit of #WorkingOutLoud, I thought I would take this on as a thought exercise and share what follows.

The question to be considered: “Can a specific, meaningfully differentiated (aka valuable), clearly intentioned definition be put behind each of ‘risk-driven testing’ and ‘risk-based testing’ so as to better support us in assembling our test strategy/approach and executing our testing?”

Defining Risk-Driven Testing and Risk-Based Testing

To work towards this goal, I will first define risk and risk management and then consider the meanings implied by “risk-driven” vs. “risk-based” in the context of testing.

What is a Risk?

Risk in software can be defined as the combination of the Likelihood of a problem occurring and the Impact of the problem if it were to occur, where a “problem” is any outcome that may seriously threaten the short or long term success of a (software) project, product, or business.

– Risk Clustering – Project-Killing Risks of Doom

The major classes of risks would include Business Risks, Project Risks, and Technical Risks.

Managing Risk Involves?

Risk Management within a project typically includes the following major activities:

- Identification: Listing potential direct and indirect risks

- Analysis: Judging the Likelihood and Impact of a risk

- Mitigation: Defining strategies to avoid/transfer, minimize/control, or accept/defer the risk

- Monitoring: Updating the risk record and triggering contingencies as things change and/or more is learned

– Risk Mitigation – Scarcity Leads to Risk-Driven Choices

A risk-value calculated from quantifying Likelihood and Impact can be used to prioritize mitigation (and testing where applicable).
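As a minimal sketch of that calculation, assume a simple 1 to 5 ordinal scale for both Likelihood and Impact, and a risk value defined as their product (one common convention, not the only one). The `Risk` class, the `prioritize` helper, and the example risks below are all hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def value(self) -> int:
        # Assumed convention: risk value = Likelihood x Impact
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest risk value."""
    return sorted(risks, key=lambda r: r.value, reverse=True)


# Hypothetical example data
risks = [
    Risk("Payment gateway timeout under load", likelihood=3, impact=5),
    Risk("Report layout breaks on small screens", likelihood=4, impact=2),
    Risk("Data migration corrupts legacy records", likelihood=2, impact=5),
]

for risk in prioritize(risks):
    print(f"{risk.value:>2}  {risk.name}")
```

A multiplied score like this is only as good as the judgement behind the two inputs, so it is best treated as a conversation starter for prioritization rather than a measurement.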

Better to be Risk-based or Risk-driven?

Here I will brainstorm on a number of points and compare risk-based and risk-driven side-by-side for each.

| | Risk-based | Risk-driven |
| --- | --- | --- |
| Restated | “using risk as a basis for testing” | “using risk to drive testing” |
| Word association | Based on risk; Founded on risk; Pool of risks | Driven by risk; Focused by risk; Specific risks |
| Risk values | Used to prioritize functions or functional areas for testing | Used to prioritize risks for testing |
| At the core | Effort-centric; Confidence-building to inform decision-making; About scheduling/assigning testing effort to assess risky functional areas in a prioritized manner | Investigation-centric; Experiment-oriented to inform decision-making; About the specific tests needed to analyze individual risks or sets of closely-coupled risks in a prioritized manner |
| Objective | Testing will check/confirm that functionality is (still) working in the risky area | Testing will examine/analyze functionality for vulnerability pertaining to a given risk |
| Role of risk | Don’t need to know the detailed why’s and wherefore’s of the risks in order to be able to test efficiently | Need to know all the “behind-the-scenes” of the risks in order to be able to test effectively |
| Primary activity | Test Management | Test Design |
| Input to | Release Management | Risk Management |
| … | … | … |
| … | … | … |
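To make the “Risk values” row a little more concrete, here is a small sketch of the two views over the same data. It assumes each risk already has a risk value and is tagged with one functional area, and that an area's score is simply the sum of its risk values; the records and both function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical risk records: (risk description, functional area, risk value)
risks = [
    ("Double charge on retry", "Checkout", 20),
    ("Tax rounding error", "Checkout", 6),
    ("Session fixation", "Login", 15),
    ("Slow search on large catalogues", "Search", 8),
]


def areas_by_risk(records):
    """Risk-based view: rank functional areas by their summed risk value."""
    totals = defaultdict(int)
    for _description, area, value in records:
        totals[area] += value
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def risks_by_value(records):
    """Risk-driven view: rank the individual risks themselves."""
    return sorted(records, key=lambda record: record[2], reverse=True)


print(areas_by_risk(risks))
print([description for description, _area, _value in risks_by_value(risks)])
```

The first view answers “where should we spend our testing effort?”, while the second answers “which risk should we investigate next?”. Both draw on exactly the same list of risks.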

At this point, my next thought is that both Release Management and Risk Management are parts of Project Management; therefore Risk-based Testing and Risk-driven Testing are both inputs to informing project management and the stakeholders that project management serves. And although it seems that I am identifying how the meaning behind the two terms could diverge, i.e.:

- Pool of risks vs. specific risks,

- Effort management vs. technical investigation,

- Testing functionality vs. testing risks,

- Efficiency vs. effectiveness,

- Planning vs. design,

- etc.

… I am wondering if I can approach this exercise from a truly non-biased perspective – it is feeling like I am trying a bit too hard to get Risk-driven Testing, as a term, to be different (and more important) than Risk-based Testing rather than simply identifying a natural divergence in meaning. Am I just creating a new kind of Fancy Chicken?

Familiar Thoughts

My attempt to separate these two terms reminds me of Michael Bolton and James Bach making their distinction between Testing and Checking for their Rapid Software Testing (RST) training course.

In “Testing vs. Checking”, Michael Bolton summarizes their view, in part, by stating:

- Checking is focused on making sure that the program doesn’t fail. Checking is all about asking and answering the question “Does this assertion pass or fail?”

- Testing is focused on “learning sufficiently everything that matters about how the program works and about how it might not.” Testing is about asking and answering the question “Is there a problem here?”

He goes on to also state:

- Testing is something that we do with the motivation of finding new information. Testing is a process of exploration, discovery, investigation, and learning.

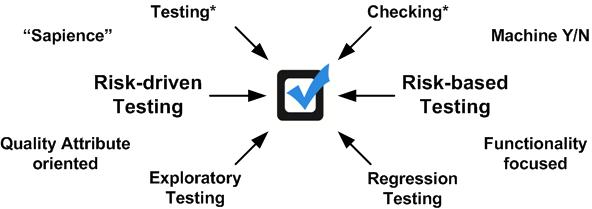

This, and their other related articles, led me to doodle the following comparison of how Risk-driven Testing and Risk-based Testing might align with different parts of the RST thinking:

* per the RST namespace circa 2009

The article quoted above is from 2009, and in subsequent writings both Michael Bolton and James Bach have evolved their position and definitions. For example, James Bach has declared the use of “sapience” to be problematic and has introduced “human checking” vs. “machine checking”. You can read about that in “Testing and Checking Refined” and also see that Testing has now been (re)elevated to include these refined definitions of Checking. And in “You Are Not Checking”, Michael Bolton has tried to clarify that humans aren’t (likely) Checking even if they are… checking? They are clearly continuing to adapt their wording as they gain further feedback on their constructs. But it also feels like wordplay: someone didn’t like a word, so another is put in its place and more discussion/argument follows. Often that discussion ends up being more about the word(s) being used than about the usefulness of the concept they are trying to advance.

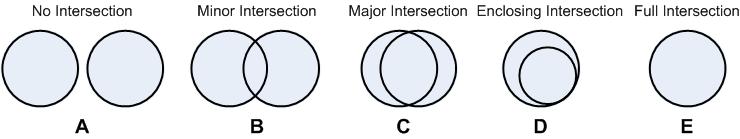

And here I am falling into the same trap. But even worse, I am trying to use the words “based” and “driven” (the third most important words out of three in each term) to make a division in something that is otherwise fundamentally the same thing (considering the other two words in each term are “risk” and “testing”); namely, an approach to testing that uses risk to guide its planning, preparation, and execution. We are not talking about a new/different method/technique of testing, but an over-arching approach to testing. Instead of strengthening the whole, I feel like I am trying to pull the two terms apart just to be able to give Risk-driven Testing its own identity: its own lesser identity, ultimately, as it would need to leave some aspects behind with Risk-based Testing.

I am trying to force an “A”, “B”, or “C” when it is already an “E”, from a practical point of view. (If there were a third part to consider, then perhaps there would be a stronger case for differentiation and then maybe a need to name the whole as a new approach to testing!)

But before turning away from trying to separate the two terms, let’s see if anyone else is already trying to make a distinction between Risk-driven Testing and Risk-based Testing. Maybe someone has come up with a good angle…

Reinventing the Wheel, or the Mousetrap?

Apparently not. One of the challenges in using Risk-based Testing or Risk-driven Testing is that both terms are already in use and neither seems to be used consistently or well. But I couldn’t find any uses where Risk-based Testing and Risk-driven Testing were being set at odds with each other. The opposite, in fact: they are used interchangeably, or even in the same breath/line. Risk-based Testing was the more common term, though. Let’s look at some of those definitions…

Most descriptions of Risk-based Testing are vague/broad or touch primarily on prioritizing the scope of the test effort (and test cases) like:

“Risk-based testing (RBT) is a type of software testing that functions as an organizational principle used to prioritize the tests of features and functions in software, based on the risk of failure, the function of their importance and likelihood or impact of failure.”

– https://en.wikipedia.org/wiki/Risk-based_testing

This one sounds like how I was describing Risk-based Testing above. But it is the “cart-before-the-horse” usages of that definition I found, where the interpretation is to put focus on descoping tests once the schedule gets tight, or just talking about using risk to (de)select which test cases to run, that are discouraging and reinforce my desire to turn to using a “new” term, one that doesn’t feel so… tarnished.

We always have limited time, limited resources, and limited information and there is never going to be a project that is risk-free/bug-free. So we always have to be smart about what we do and find the cheapest/fastest effective approach to testing. Risk-based Testing can be part of the answer if one employs it as it is meant to be.

In “Heuristic Risk-Based Testing”, James Bach (1999) states that testing should follow the risks (akin to how I described Risk-driven Testing above):

- Make a prioritized list of risks.

- Perform testing that explores each risk.

- As risks evaporate and new ones emerge, adjust your test effort to stay focused on the current crop.
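These three steps can be read as a loop. The sketch below is only my illustration of that loop, not anything taken from Bach's article: it assumes risks are kept in a dictionary of name to priority and that the team can explore a fixed number of risks per cycle. The function name `focus_test_effort` and all of the data are hypothetical.

```python
def focus_test_effort(risks, capacity):
    """Pick the highest-priority open risks that fit the current capacity.

    risks: dict mapping a risk name to its priority (higher = more urgent).
    capacity: how many risks the team can explore in this cycle.
    """
    ranked = sorted(risks, key=risks.get, reverse=True)
    return ranked[:capacity]


# Step 1: a hypothetical prioritized list of risks
open_risks = {"data loss on sync": 9, "slow checkout": 6, "typo in help text": 1}

# Step 2: perform testing that explores the current top risks
print(focus_test_effort(open_risks, capacity=2))

# Step 3: one risk evaporates, a new one emerges, and the focus shifts
del open_risks["data loss on sync"]
open_risks["login lockout after upgrade"] = 8
print(focus_test_effort(open_risks, capacity=2))
```

The point of the sketch is the third step: the list is re-ranked every cycle, so the test effort follows the risks as they change rather than following a fixed plan.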

And in “Risk-Based Testing: Some Basic Concepts”, Cem Kaner (2008) discusses in detail the organizational benefits, as well as the benefits of driving testing from risks, based on the following three top-level bullets:

- Risk-based test prioritization: Evaluate each area of a product and allocate time/money according to perceived risk.

- Risk-based test lobbying: Use information about risk to justify requests for more time/staff.

- Risk-based test design: A program is a collection of opportunities for things to go wrong. For each way that you can imagine the program failing, design tests to determine whether the program actually will fail in that way. The most powerful tests are the ones that maximize a program’s opportunity to fail.

This last definition seems to combine my outlines of both Risk-Based Testing and Risk-driven Testing plus a bit more. I had not remembered to include the risk-based test lobbying ideas in the above table, though I certainly do this in practice. Looks like a winner of a definition.

It Can Mean a Wor(l)d of Difference

By changing one word, we can change the underlying meaning that is understood by others, for better or for worse. Will we cause understanding and clarity? Will we cause disagreement or confusion? Who does it help? Will it be just a word of difference or a whole world of difference?

I was already familiar with these definitions from Cem Kaner and James Bach, and this exercise has served to remind me of them. If I find/found these Risk-based Testing definitions suitable, then why do I like to use Risk-driven Testing?

As mentioned at the beginning, maybe it is the energy, the “marketing” oomph! Maybe it was to feel alignment with Test-driven Development (TDD), Behaviour-driven Development (BDD), and other xDD’s. But because I often see that Risk-based Testing is weakly implemented, I think mostly I use the term Risk-driven Testing to better remind myself, and my teams, that we are supposed to be using risk as the impetus for our test activities.

This feels like the crux of my real issue: using risk. Where is the risk identification and risk analysis on most projects? Sure, many PMs will have a list of risks for the project from a management point of view. But where are the risk identification and analysis activities that testing can be a part of, so we can better (1) learn about and investigate the system architecture and design, so we can better (2) exercise its functional and non-functional capabilities and search for vulnerabilities, so we can better (3) comment on how risky one thing or another might be to the project, the product, and/or the business?

Maybe I should change the question to something like: “Are we performing Risk-based Testing if we don’t have a list of risks with which testing can engage?”

In my experience, the lack of a formally integrated Risk Management process is quite common for projects, large and small. In the face of that lack – the lack of risk-related process and artifacts, the lack of risk-related information – can testing be said to be risk-based?

Projects often prioritize testing without risk identification/analysis/mitigation/monitoring data as input. Someone says this is “new”, “changed”, “complex”, or “critical”. Common sense then tells you that there is some risk here. Therefore, testing should put in some effort to check it out. But without Risk Management, how do you know how much and with what priority? These are questions that can’t be answered in a calculated/measured manner without Risk Management. They can only be answered relatively speaking; this one is big, this one is small – test the big one until you are confident it is fine and/or we run out of time.

It seems that this is a prioritization approach where there are consensus-driven priorities based on some guiding ideas of what has changed and what has had problems in the past which could make things risky. We are then forced to use these best guesses/inferences to plan our efforts. Instead of Risk-based Testing, we could call it Commonsense Testing.

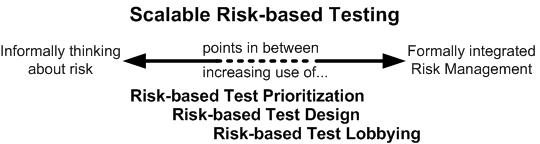

The point here is that when projects claim to use Risk-based Testing, many are not using an actual Risk Management process to identify, analyze, mitigate, and monitor a set of risks. And so, the functionalities being tested are not tied to a specific individual risk or small set of related risks. BUT, there is some thinking about risk – perhaps this can be considered the beginning; the beginning of Scalable Risk-Based Testing.

When discussing the ability and need to scale the rigour of testing and which test planning and execution techniques can be employed, the role of risk will be highly dependent on how formally risks are identified and managed. Using a scalable approach allows the project team to provide visibility as to what challenges may exist for the test team to be able to inform stakeholders about system quality – resulting in expectations being set on how the test team will be able to maximize their contribution to the project, given its constraints/context. [Ref: Scalable V-Model: An Illustrative Tool for Crafting a Test Approach]

This is a great opportunity for testing to advocate for Risk Management activities across the project (to the benefit of all) and to drive increasing use of Risk-based Test Prioritization, Risk-based Test Design, and Risk-based Test Lobbying. [Ref: New Project? Shift-Left to Start Right!]

Conclusion

Well, that was a bit of a walkabout and it didn’t finish up where I guessed it would, but thinking or working out loud can (should) be like that. This was a good confrontation of “my common language” that I have to reconcile against when speaking with clients and teams and resulted in a reconnection with the source definitions that “drove” my thinking on this topic in the first place.

I was expecting to say something here about how Fancy Chickens all look the same when they have been barbequed, e.g. when the project gets hot… but maybe the observation at this point is that fanciness (feathers) can often be a cover for the actual value, deceiving us (intentionally or not) into thinking we are getting more than we are (a chicken).

Going forward, I will be looking into more formally capturing details of what I will now refer to as my “Scalable Risk-based Testing” approach, and seeing how I can apply it to that client situation that prompted this whole exercise.

On your own projects, why not think about how you use risk to guide the testing effort and, vice-versa, how you can use testing to help manage (ID/Assess/Mitigate/Monitor) individual risks?

In the meantime, regardless of the labels you give your test approach and project processes: get involved early, investigate and learn, and do “good” for your project/team.

And remember…

It’s the thought that counts – the thought you put into all your test activities.