Before I started my career as a tester, I was a scientist doing research in particle physics, and my years in science trained me to be skeptical, inquisitive and open to new approaches. It was the idea of continuous learning and exploration, always working toward the next discovery, that attracted me to science in the first place.

I’ve found many parallels between science and testing, but lately I’ve started feeling that maybe we’re getting stagnant. I go to conferences to be inspired and get new ideas, but it’s starting to feel like I’m hearing the same things over and over again. What are the new ideas and trends in testing? What’s a new approach or technique that you’ve tried lately?

I think there is currently a lack of testing innovation, and testing as a profession is not evolving as fast as I would like it to. In this article, I’ll try to frame the problem as I see it, but I’m not going to give you answers or solutions – my goal is actually to raise questions. And hopefully, convince you to help me start a test revolution.

Stating the Problem

We live in a world where Moore’s law [1] describes an incredible development of hardware capabilities, but where software also keeps getting more and more complex, and tools and methods are continuously evolving. From a software development perspective the world seems to just keep spinning faster and faster, and in more and more intricate patterns. Is testing really keeping up with the advances of development? Are our testing approaches evolving as quickly as the new technologies, or is testing being left behind, using the same methods and techniques we were using a decade ago?

Thinking Inside the Box

How do you usually approach testing? As in so many other situations, we often simply revert to doing things the way we did last time. It seemed to work then, and why change something that’s working? We use what we already have in terms of test tools, test environments and test data. How often do we instead question the habits we’ve developed?

Have you ever heard – or said – “it can’t be tested”? If it’s part of the product, and expected to be used, it likely can – and probably should – be tested. But it’s easy to shy away from testing problems where there is no obvious solution and use the excuse that it “can’t be done”.

We sometimes do a great job of limiting ourselves, developing boundaries we decide can’t be crossed and creating a comfortable little testing box that we hide in. Can we lift ourselves out of that box, and should we try to?

The Solution

Testers in general, and agile testers in particular, need to become more innovative and find new ways to test more efficiently and effectively. I think we should start a test revolution! I want testers to be more creative and come up with new ideas, not only at execution time but also when we start planning testing. To become more creative and innovative, testers should ask for input from all team members and try to make the test planning a group effort.

Quality is a Team Effort

Quality is a team effort – it’s everyone’s responsibility. Testing itself cannot increase quality – as a tester I can inform you of potential issues, but I don’t fix them, or even decide if they should be fixed. Testers are not gatekeepers. Testers are carriers of information. Don’t forget – we all have the same objective regardless of role: building a high-quality product! We’re on the same side.

The first thing to do before we start testing is to understand why we are testing – what does quality mean to us?

Is it a product that is:

- Functional

- Fast

- Reliable

- Compatible

- …?

Testers don’t get to make that call – it should be a collaborative activity that involves the whole team. The quality criteria that we decide are the most important will then guide the focus of testing.

Next we need to look at the product risks – what are we worried about? What do we think might go wrong? Testing can help us feel more comfortable and confident with our product by mitigating those risks, thereby helping to build a higher-quality product. Everyone needs to contribute to the risk analysis to add his or her own unique perspective. A developer, for example, will see different risks than a tester. Risk analysis is a team activity.
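One way to make a team risk analysis concrete is to let each role score the risks and then rank them by a combined score. The sketch below is only an illustration: the likelihood-times-impact score, the 1-5 scale, the `Risk` class and the sample risks are all assumptions made for this example, not a method prescribed in this article.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Risk:
    """A product risk with scores collected from several team members."""
    description: str
    likelihood: list[int]  # one score per team member (assumed 1-5 scale)
    impact: list[int]      # one score per team member (assumed 1-5 scale)

    def score(self) -> float:
        # Average across roles so that no single perspective dominates.
        return mean(self.likelihood) * mean(self.impact)


def rank_risks(risks: list[Risk]) -> list[Risk]:
    """Return the risks ordered from highest to lowest combined score."""
    return sorted(risks, key=lambda r: r.score(), reverse=True)


# Hypothetical scores from a developer, a tester and a product owner.
risks = [
    Risk("Checkout fails under load", likelihood=[2, 4, 3], impact=[5, 5, 4]),
    Risk("Typo in help text", likelihood=[4, 4, 5], impact=[1, 1, 2]),
    Risk("Data loss on upgrade", likelihood=[1, 2, 2], impact=[5, 5, 5]),
]

for risk in rank_risks(risks):
    print(f"{risk.score():5.2f}  {risk.description}")
```

The ranking itself matters less than the conversation it triggers: where the individual scores for a risk differ widely, the team has found a perspective worth discussing.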

Time to Test

Let’s assume we have a joint understanding of what quality means to us, and we’ve agreed on a set of product risks that we want to mitigate by designing tests that can reveal the corresponding failures. Now what? How do we actually test?

This is where we should try to think more in terms of what we need and not what we have:

- What tools do we need – not “what tools do we have”

- How much documentation do we need – not “what documentation do we usually produce”

- How will we generate test data – not “what data do we have”

- What test environments do we need – not “what environments do we have”

Is this where the teamwork ends? Not at all! This is where we need to be innovative, and creative, and work together – as a team – to come up with new ideas. If we need a tool that we don’t have – how can we get it? Can we buy it, or build it?
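Test data is one case where building what we need can be cheap. The sketch below generates synthetic records on demand instead of reusing whatever data happens to exist. It is a minimal example under stated assumptions: the `Customer` record, its fields and the 0-120 age range are hypothetical, and a fixed seed is used so that a run can be repeated.

```python
import random
import string
from dataclasses import dataclass


@dataclass
class Customer:
    """Hypothetical record type; the fields are illustrative only."""
    name: str
    age: int
    email: str


def generate_customers(count: int, seed: int = 0) -> list[Customer]:
    """Generate `count` synthetic customers, reproducibly for a given seed."""
    rng = random.Random(seed)  # local generator, leaves global state untouched
    customers = []
    for _ in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=rng.randint(1, 12)))
        # Mix boundary ages (assumed valid range 0-120) with random ones.
        age = rng.choice([0, 17, 18, 120, rng.randint(0, 120)])
        customers.append(Customer(name.title(), age, f"{name}@example.com"))
    return customers


for customer in generate_customers(3):
    print(customer)
```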

Follow-Your-Nose Testing

Nobel laureate Dr. Michael Smith at UBC advocated “follow-your-nose research” in his field, biotechnology; he was willing to pursue new ideas even if it meant that he had to learn new methods or technologies. Similarly, testers should do “follow-your-nose testing”, exploring new approaches and questioning old habits. “Following your nose” means trusting your own feelings rather than obeying rules and convention or letting yourself be influenced by other people’s opinions.

If the first question is “What do we need to test?” then the second question is “How can we do that?” Sometimes it might not be worth the effort – or even doable – but we need to at least consider it, without letting technical obstacles, accepted so-called “best practices” or culture stop us from discussing new and potentially conflicting suggestions and ideas. The tester role is not static – we need to continuously update our skills and learn new tools and methodologies. But where and how do we find these new ideas?

Brainstorming

The most common approach to generating innovation and stimulating creativity is brainstorming. There’s only one problem with that. Brainstorming doesn’t work.

Brainstorming is the brainchild of Alex Osborn, who introduced “using the brain to storm a creative problem” in his 1948 book “Your Creative Power” [2]. The most important rule of a brainstorming session is the absence of criticism and negative feedback, which of course makes it very appealing – it’s nice to get positive reinforcement.

Brainstorming was an immediate hit, and almost 70 years later we’re still using it frequently. However, a 1958 study at Yale University showed that working individually rather than in brainstorming sessions generated twice as many ideas – brainstorming was less creative! [3] This has since been confirmed by numerous scientific studies.

The biggest problem with brainstorming is groupthink – our decision-making is highly influenced by our desire for conformity in the group. We tend to listen to other people’s ideas instead of thinking of our own, which also means that the first ideas mentioned get favoured. Collecting the brainstorming participants’ ideas in advance counters this to some extent, and also helps introverts take a more active part in the discussion.

The original concept of brainstorming also limits debating and criticism, but debate and criticism don’t inhibit ideas: they actually stimulate them. A debate is a formal discussion on a particular topic in which opposing arguments are put forward. The whole idea is that people participating have different opinions. Debating should be done respectfully, but the aim is not to be agreeable. Criticizing is about providing feedback, but doesn’t in itself imply that the feedback is negative. It should be a constructive approach to weighing pros and cons against each other.

Innovating Testing

Innovation is stimulated by a diverse group that debates, criticizes and questions ideas. Make sure to include everyone on the team. Problems need to be approached from different angles. Quality concerns everyone, and remember – we’re a team and we all have the same goal, so we should be cooperating and working toward the same end. That means that everyone has a vested interest in testing. And everyone should have a right to provide input on test planning. Including non-testers means including people who don’t know why something can’t be done. Innovation is about asking “Why?” and “Why not?” Let’s not limit ourselves.

Sometimes innovation requires that you dare to throw everything away and start over. Innovation isn’t always the newest thing: innovation can be using old concepts in new combinations or new ways. Innovation is not always a huge change either – it can be small improvements too. But it is a change. Innovation is part of human nature: we’re all inherently innovative and can learn to be even more innovative. There’s no such thing as “I’m not creative enough”. Curiosity can be trained out of us, but it can also be trained back in. Keep in mind too that innovation doesn’t always lead to better ideas.

We also can’t forget that lifting our thinking out of the box requires an environment where it feels safe to voice ideas that might be considered outrageous, crazy or expensive. Everyone’s opinion counts, and everyone’s opinion is valuable.

Summary

The problem in my mind is two-fold. First, there is what I see as a stagnation, which I would almost describe as compliance. Maybe testing has become compliant? Are we too prone to agreeing and obeying rules and standards, standards that are often forced upon us from outside of the testing community? Part of the solution I believe to be follow-your-nose testing. What I’m asking for is that we try to invent new approaches to testing, or re-invent testing. Is there another way of testing this? Why are we testing it this way? Why are we not testing it this way instead?

Then there is the teamwork aspect. Testing often takes place in isolation, even in agile environments. Testers should do most of the testing, and I strongly believe that there is still a tester role on an agile team, but everyone should participate in test planning. Testing just isn’t an isolated activity!

Everyone needs to work together to:

- Define what quality means to us

- Identify product risks

- Provide input on test planning

If it’s all about the team, does this mean we don’t need dedicated testers anymore? No. Testers need the input from all different roles, and hence perspectives, but the actual task of testing still requires a special skill set. Testers are trained to have a different mindset than other roles. Testers have unique knowledge and experience of typical failures, weak points, etc. Testers are user advocates. But testers are not on the other side of the wall – they’re part of the team. Testers don’t break software – testers help build better software.

What about the Revolution?

A revolution is “a dramatic and wide-reaching change in the way something works or is organized or in people’s ideas about it”.

I believe we need a big change – we need to change how we think about testing. But who will start the revolution? Regardless of your current role, I want you to be part of it – help me revolutionize testing. You can make a difference – your ideas could be what will make testing take the next evolutionary step.

[1] http://en.wikipedia.org/wiki/Moore%27s_law

[2] Your Creative Power, Alex Osborn, Charles Scribner’s Sons, 1948

[3] http://www.newyorker.com/magazine/2012/01/30/groupthink

To summarize:


While there is no doubt that all three organizations had a strategy, the presence and eventual predominance of Apple had a devastating effect on both Nokia and BlackBerry.


So what is needed to ensure that our strategies survive contact with the enemy, which in the context of a business strategy would be a competitor?

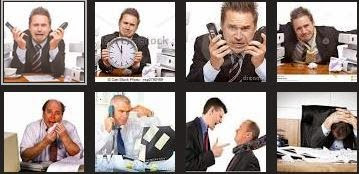

In the absence of clear and unifying mission and vision objectives, the Quality profession tends to fall into a destructive pattern which I refer to as Quality “Indig” Nation. Rather than focus on the tasks at hand, the participants will devolve into these damaging archetypes which can impair and undermine the overall strategy.

Tribalists: The Quality profession does not have a homogeneous background, but has evolved through the combined efforts of different types of practitioners with their particular expertise. However, when one of these groups loses sight of the greater good and mutual respect, and seeks to dominate at the expense of the others, it is like the person with the hammer who sees all problems as a nail. Rather than addressing the overall problems, the emphasis is on promoting a particular concept or set of practices, and opposing balance and diversity of the solution.

Symphony of Work: This approach requires that all participants are aware of their particular part in the overall effort. In this context, the timing is as important as the overall fulfillment, as there are many interdependencies.

Accountability: In any venture, it is important to have a work breakdown structure which clearly reveals the parties responsible and accountable for fulfillment of that strategy. From this structure and framework, the interdependencies are revealed and can be managed. With accountability, expectations can be refined so that future estimates will be more accurate.

Acceleration: If the strategy is being successfully deployed, the next step is to accelerate the implementation to increase the scope, scale, and impact of the strategy.

This document reflects a convergence of practices into a common category. This viewpoint is corroborated in similar peer-reviewed publications.

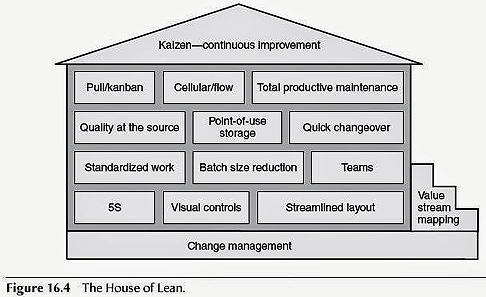

The “House of Lean” captures the key practices and characteristics of Lean, and also establishes the scope and limitations of Lean as a singular approach. For overall improvement, Lean is not the sole solution, but one of several practices recommended by the authors.

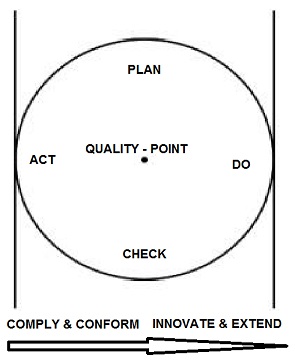

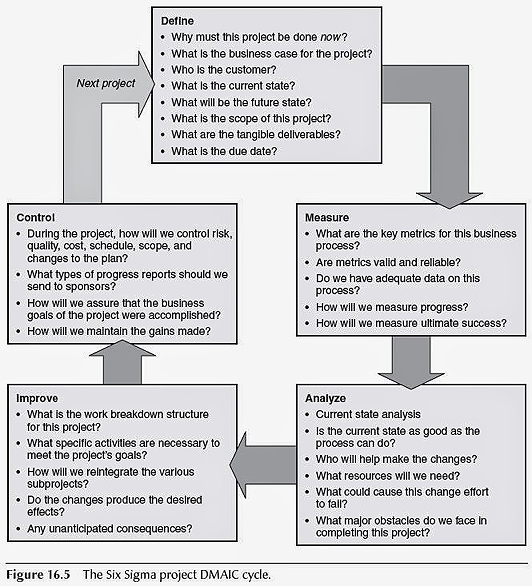

More complex improvement initiatives require the thoughtful and deliberate diagnostic approaches reflective of a Six Sigma project, as shown in the image of the Define-Measure-Analyze-Improve-Control cycle. One particular question within the Improve category (What specific activities are necessary to meet the project’s goals?) channels directly into the proven, tactical Lean techniques and practices. In this way, Six Sigma and Lean are not conflicting, but complementary and synergistic activities that, when conjoined, enhance and expand their respective effectiveness.

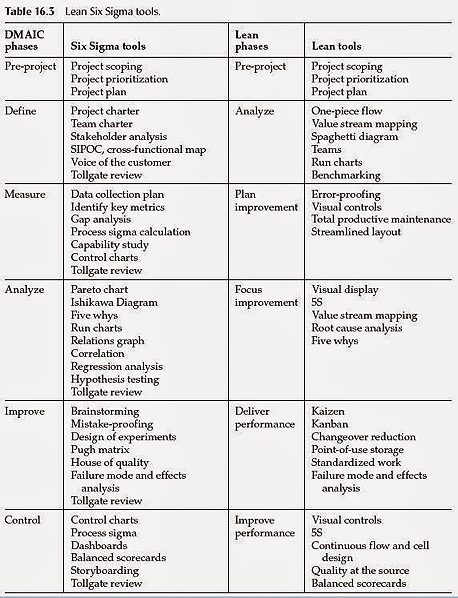

By having a unified Body of Knowledge, the respective advantages of Lean and Six Sigma can be combined. Rather than mutually exclusive “either/or” scenarios, the Quality practitioner can select and apply the most appropriate and relevant practices to fit the situation and serve the best interests of the client or organization. Many of the practices listed in the table above are common (e.g. failure mode and effects analysis, run charts, five whys method) or use common methods (e.g. brainstorming, kaizen).
