As testers, we tend to be analytical thinkers by nature. This is a valuable quality in our work, but how often do we take note of how we exercise those skills in other areas of our lives?

The following is a true story of three software testers attempting to install a wireless doorbell system and the parallels between this task and a typical software development process.

Requirements

The requirements for this project were pretty simple. In an office with an exterior door to the street, without a dedicated receptionist, a system was needed to notify staff whenever somebody entered the office.

Implementation

To satisfy these requirements, an off-the-shelf product was selected. The chosen product was advertised as a “portable wireless chime & push button” which included the following features:

• 6 chime tunes with CD quality sound and fully adjustable volume control

• Individually coded bell push button to avoid interference with other users

• 450 ft. range (137 meters)

• Low battery indicator

• Easy installation – no wires and no need to program the included bell push button

Easy installation was the biggest selling point for this particular unit. (It was also the source of what would prove to be a false sense of optimism.)

With the product chosen and purchased, the integration work for this system (installation) would be done by internal staff. Because installation was a multi-step process, we made sure to test it at each step.

Unit Test

The first step was to test each of the individual components while they were laid out on a table. These components included:

• A set of door magnets connected to a wireless transmitter

• A separate wireless push button for the exterior

• A base unit with a wireless receiver and chime

Initial unit tests all passed. Pressing the exterior push button unit activated the chime as intended and so did separating the door magnets. It seemed we were ready to proceed with the integration.

Integration Test

The next step involved mounting one of the two door magnets, the wireless transmitter and the exterior push button, then testing that they were still able to activate the chime on the base unit. At this point, everything continued to work as expected, so it was time for the full deployment.

Smoke Test

After mounting the second door magnet and the base unit, deployment was complete and the system was ready for an initial smoke test. Since the door magnets were the highest priority items, they were the first components to be tested. To perform the test, we would simply open the door and confirm that the chime on the base unit activated.

Unfortunately, the actual behaviour that was observed differed from the expected behaviour. In this case, nothing happened at all. It would seem that we had a defect.

Isolating the Defect

In order to correct the issue, we first needed to investigate and determine the root cause. Our first hunch was that, when attached to the door and frame, the magnets were no longer making contact. Adjusting their positions and forcing contact proved that this was not the cause after all.

Next, we considered that the metal door might be interfering with the magnets, but removing them from the door disproved that theory as well.

We thought that perhaps there was some other interference between the wireless transmitter and the base unit, which was now installed on the other side of the room. We removed the base from the wall and placed it near the transmitter once again, eliminating that as a possibility.

Did a wire come loose when we were installing the components? No, that wasn’t it. Maybe the transmitter and receiver had somehow become un-paired? No, that wasn’t it either.

The list of possibilities was shrinking quickly. What else could possibly be causing the problem? Then, we had a thought. What if the battery in the transmitter was dead? That seemed improbable, though, because we had already tested each of the components. Still, it was worth testing again just to be sure.

Using the battery from the exterior push-button unit, we tested the theory and Eureka! That was it! After all the setup, testing and troubleshooting we had done, a single dead battery was the cause of all our problems.

Summary

Looking back, what did we learn through all this? We learned that, similar to the process of isolating a defect in the code we are testing, an analytical approach to troubleshooting helps us quickly eliminate possible causes of a problem and narrow in on the true root cause.

On the other hand, our trial-and-error approach to troubleshooting meant that we investigated many other possible causes before isolating the real issue. By following a different approach, might we have found the issue even more quickly?
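One alternative to trial and error is to rank the candidate causes before checking any of them, for example by estimated likelihood divided by the effort needed to check. The sketch below is illustrative only: the likelihood and cost figures are invented for the example rather than measured during our installation, and `Hypothesis` and `rank_hypotheses` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    name: str
    likelihood: float    # rough guess between 0 and 1 (assumed, not measured)
    cost_minutes: float  # rough effort needed to check it (assumed)


def rank_hypotheses(hypotheses):
    """Order hypotheses so that likely, cheap-to-check causes come first."""
    return sorted(
        hypotheses,
        key=lambda h: h.likelihood / h.cost_minutes,
        reverse=True,
    )


# Candidate causes from the story, with made-up likelihoods and costs.
candidates = [
    Hypothesis("magnets not making contact", 0.30, 5),
    Hypothesis("metal door interfering with magnets", 0.15, 10),
    Hypothesis("interference between transmitter and base", 0.15, 10),
    Hypothesis("loose wire", 0.10, 5),
    Hypothesis("transmitter and receiver un-paired", 0.10, 5),
    Hypothesis("dead transmitter battery", 0.20, 1),
]

for h in rank_hypotheses(candidates):
    print(f"{h.name}: score {h.likelihood / h.cost_minutes:.3f}")
```

With these made-up numbers, the battery swap ranks first: it was not the most likely cause in our minds, but it takes about a minute to check. Different estimates would give a different order, which is the point of writing them down.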

In this case, the same team was involved in all phases of the project, from selecting the product, to performing the integration and, ultimately, performing the testing. Is it possible that our detailed knowledge of the system affected our perspective on the situation? As testers, we sometimes assume complicated root causes, and the risk of doing so can increase as we become more knowledgeable about the inner workings of the system we are testing. This highlights the value of the external perspective offered by a team of independent testers.

We also learned the importance of challenging assumptions. In our case, we initially assumed that the batteries that were included with the unit were fully (or mostly) charged. Had we continued to accept this assumption as fact, we would never have found and corrected the issue at all.
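In test code, one way to keep an assumption from hardening into fact is to write it down as an explicit precondition that is checked before the test runs. The following is a minimal sketch in Python; the `Transmitter` class, its `battery_level` field, and the 20% threshold are all hypothetical stand-ins for whatever a real setup would measure.

```python
from dataclasses import dataclass


class PreconditionError(Exception):
    """Raised when an assumption the test relies on does not hold."""


@dataclass
class Transmitter:
    battery_level: float  # fraction of full charge, 0.0 to 1.0 (hypothetical)


def require_charged(transmitter, minimum=0.2):
    """Fail loudly if the battery assumption is false."""
    if transmitter.battery_level < minimum:
        raise PreconditionError(
            f"battery at {transmitter.battery_level:.0%}, "
            f"need at least {minimum:.0%}"
        )


def door_open_triggers_chime(transmitter):
    """Stand-in for the smoke test; here it only verifies the precondition."""
    require_charged(transmitter)
    return True
```

A failed precondition then reports the dead battery directly, instead of surfacing later as a silent chime with several plausible explanations.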

And, finally, we learned that while testers may be good at testing software, doorbell installation may best be left to professionals from other disciplines.

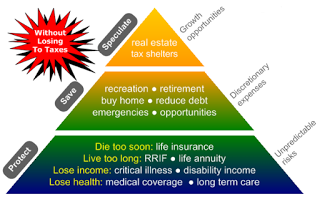

This part of the sales pitch was intended to engage the prospective customer, and pinpoint their level of financial need and sophistication. Based on that need, the rest of the sales approach could be adjusted to respond to the preferences and priorities of the customer, leading to a sale of an appropriate financial package.

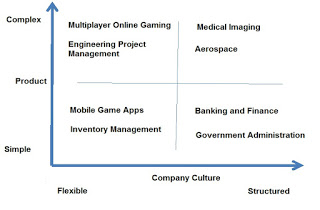

The outcome would be a quadrant chart reflective of the types of organizations and software applications. This knowledge would be helpful for me as an instructor to know how to connect and establish the relevance of my subject material, and helping my students to connect what they learned in the classroom with their workplace challenges.

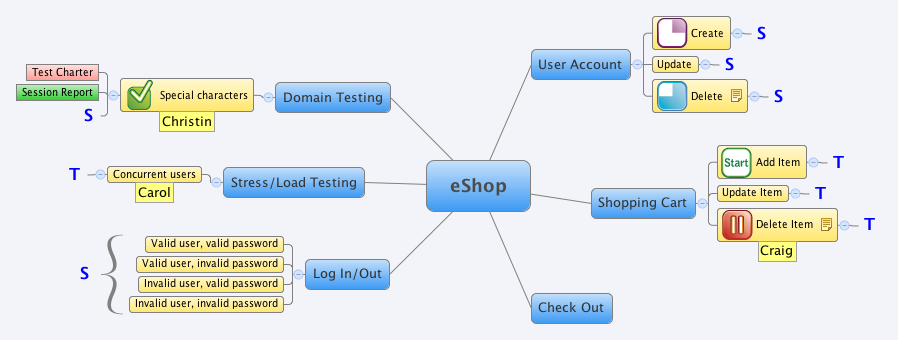

Figure 1: Example of an xBTM mind map. Here, an e-shop is being tested and the mind map shows some of the threads. For example, “Create user account” is being tested in a session, whereas “Add item to shopping cart” is being tested as a thread.
