I started my testing career as part of a user group that did analysis, technical writing, training and testing in the Systems Department of an insurance company, at a time when having nontechnical people involved in software development was very unusual. We provided informal requirements and told the programmers when the results were wrong. We tested based on the work we had done ourselves in the branches, starting at a very basic function level as the code was written and continuing through to end-to-end testing. At that time, none of our applications were integrated. Management decided when the system was due, based partly on advice from our supervisor and partly on when it was needed.

Some years later, finally in an environment where testing was a job and a career path, I registered for a certification program that covered software quality from many different angles, including, but not limited to, testing. My formal training in IT was very limited, so I studied many different areas. Like most certifications, this one had predefined right answers for the exam. Unlike many others, though, it required you to work out those answers yourself. The organization was very clear on one point: there was a right way to do things. I finished the course with a lot more knowledge, a better idea of what I was doing, and a set of blinders that came from knowing I was the Quality Police. My job, in my mind, was to ensure that things were done properly, and I tried to do it well. I fully expected that an application would go into Production when, and only when, the Test Team approved it.

Some of us aren’t very good at wearing blinders; after a while, mine became uncomfortable. Do you ever think about why we build software? The popular answer seems to change every few years. Sometimes there is a strong belief that the craft itself is more important – and certainly more interesting – than the use of the application to the people who pay for it. That eventually becomes a hard sell, and we swing to the other extreme, where business departments hire their own technical staff to do things the way they are told. After a while, technical debt causes enough trouble that we swing back the other way, again to an extreme.

We are now dependent enough on technology that IT can’t be in competition with the business if we want to succeed. We need perspective: an understanding on both sides of why each group’s opinion has value. Some things have to go in on the specified date whether we like it or not, such as tax rate changes and any sort of regulatory change. Some applications are life critical. Others are business critical; lives may not be lost, but the company may go out of business. Customer expectations drive any web application, and its quality often reflects the speed at which it had to be delivered.

I have gone from seeing myself as the Quality Police to seeing myself as a reporter. My job is to report what I see and what I don’t see. Sometimes, part of that is saying that we have not even looked at some areas, along with my assessment of how high a risk that creates. Sometimes, it involves arguing over testing scope with a project manager who does not want to look past the edge of our application or our change to see what the effect might be on another application and on the company as a whole. Standing on a soapbox saying ‘I am the Quality Police and you have to do what I say’ has never been very effective. Soapboxes in the middle of the road only create a roadblock. We all do better if we hear about things early, rather than at the last minute. We all do better when we are listened to, from both the business perspective and the IT perspective. We all do better when we have choices, and we need to provide alternatives. As reporters, I think our real job is communicating with everyone concerned, respecting their positions and understanding their problems from their own point of view. We must be reporters who remember why we do this work if we want to do it well.

The risk is to the business and the decision should be theirs. It needs to be an informed decision. Businesses that hire and listen to reporters have a better chance of success. We need to work together to succeed.

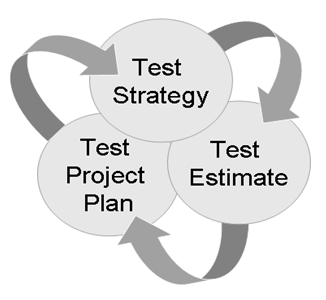

The Test Strategy, the Test Estimate, and the Test Project Plan are interdependent, which creates a cyclical dependency among the three artifacts: a change to any one of them should trigger a review, and possibly an update, of the other two.